Configuring Prometheus targets with SaltStack

Prometheus is a pull-based monitoring server. At a high level, you configure it to read metrics from a series of HTTP addresses, also known as scrape targets. These scrape targets are hosted by various exporters as well as your own applications.

At Backbeat we use SaltStack and Consul to tell Prometheus about these targets. In this post we’ll go through some SaltStack techniques, and we’ll discuss Consul in a future post.

Static configs

The most basic scrape targets are hard coded with the static_configs option:

    scrape_configs:
      - job_name: 'node_exporter'
        static_configs:
          - targets:
            - '10.10.10.1:9100'
            - '10.10.10.2:9100'
            - '10.10.10.3:9100'

This tells Prometheus to scrape three IP addresses on port 9100 (the default node_exporter port) with a job name of node_exporter.

Set static config targets using a custom execution module

What if we want to scrape targets that change constantly, e.g. web servers in an autoscaling group? With Salt jinja templating, it’d be ideal to have something like this:

    {% set web_ips = salt['get_web_ips']() %}
    scrape_configs:
      - job_name: 'node_exporter'
        static_configs:
          - targets:
            {% for ip in web_ips %}
            - '{{ip}}:9100'
            {% endfor %}

The tricky part, of course, is implementing the get_web_ips salt function.

Let’s start by adding a custom execution module that returns the hard coded IP addresses.

In your salt directory, create _modules/custom.py:

    def web_ips():
        return [
            '10.10.10.1',
            '10.10.10.2',
            '10.10.10.3',
        ]

Sync the new module to the Prometheus minion with saltutil.sync_modules, then update the Prometheus config template:

    - {% set web_ips = salt['get_web_ips']() %}
    + {% set web_ips = salt['custom.web_ips']() %}
      scrape_configs:
        - job_name: 'node_exporter'

Success! The target IP addresses will be read from the custom module.
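To see what the template loop produces without applying a Salt state, the rendering can be approximated in plain Python. This is a minimal sketch, not Salt's own Jinja renderer: `render_scrape_config` is a hypothetical helper, and the IP list is the hard-coded one from custom.py.

```python
def web_ips():
    # Same hard-coded list as _modules/custom.py.
    return ['10.10.10.1', '10.10.10.2', '10.10.10.3']


def render_scrape_config(ips, port=9100):
    """Hypothetical helper: mimic the Jinja loop in the Prometheus template."""
    lines = [
        "scrape_configs:",
        "  - job_name: 'node_exporter'",
        "    static_configs:",
        "      - targets:",
    ]
    # One target line per IP, like the {% for ip in web_ips %} block.
    for ip in ips:
        lines.append("        - '{}:{}'".format(ip, port))
    return "\n".join(lines)


print(render_scrape_config(web_ips()))
```

Changing the list returned by `web_ips()` changes the rendered targets, which is the property the rest of the post builds on.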

Making the module dynamic with Salt Mine

How do we change the list of IPs returned? For example, with two web minions:

web1.example.com - 10.10.10.1

web2.example.com - 10.10.10.2

Or four web minions:

web1.example.com - 10.10.10.1

web2.example.com - 10.10.10.2

web3.example.com - 10.10.10.3

web4.example.com - 10.10.10.4

Getting this information is easy on the salt master, assuming the eth1 interface:

    salt 'web*' grains.get ip4_interfaces:eth1:0
    web1.example.com:
        10.10.10.1
    web2.example.com:
        10.10.10.2
    web3.example.com:
        10.10.10.3
    web4.example.com:
        10.10.10.4

Minions can’t access information about other minions however, so if we configure Prometheus on the stats.example.com minion we won’t be able to do this.

Instead, we need to publish this information to the Salt Mine for other minions to access.

On each web minion, create /etc/salt/minion.d/mine_functions.conf:

    # Run mine functions every 60 minutes and when the minion starts
    mine_interval: 60
    mine_functions:
      ip_address:
        - mine_function: grains.get
        - 'ip4_interfaces:eth1:0'

This makes each minion’s IP address available in the ip_address mine entry.

We can access the mine from any minion:

    salt stats.example.com mine.get web\* ip_address
    stats.example.com:
        ----------
        web1.example.com:
            10.10.10.1
        web2.example.com:
            10.10.10.2

Updating our module is simple:

      def web_ips():
    -     return [
    -         '10.10.10.1',
    -         '10.10.10.2',
    -         '10.10.10.3',
    -     ]
    +     return __salt__['mine.get']('web*', 'ip_address').values()

Now the returned IP addresses will stay up to date. Perfect!
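The module relies on `__salt__`, which Salt's loader injects into execution modules at runtime, so it cannot be run directly as a script. To try the logic outside Salt, a stand-in can be supplied by hand. The sketch below assumes a fake mine: `FAKE_MINE` and `fake_mine_get` are hypothetical names rather than Salt APIs, and the stats address is made up. Like the `mine.get` output shown earlier, the stand-in returns a dictionary keyed by minion ID.

```python
from fnmatch import fnmatch

# Hypothetical mine contents: minion ID -> {mine entry name -> value}.
FAKE_MINE = {
    'web1.example.com': {'ip_address': '10.10.10.1'},
    'web2.example.com': {'ip_address': '10.10.10.2'},
    'stats.example.com': {'ip_address': '10.10.10.9'},
}


def fake_mine_get(tgt, fun):
    """Stand-in for mine.get: glob-match minion IDs, return {minion: value}."""
    return {
        minion: entries[fun]
        for minion, entries in FAKE_MINE.items()
        if fnmatch(minion, tgt) and fun in entries
    }


# Salt normally injects __salt__; here it is provided by hand.
__salt__ = {'mine.get': fake_mine_get}


def web_ips():
    # list() turns the dict view into a plain list on Python 3.
    return list(__salt__['mine.get']('web*', 'ip_address').values())


print(sorted(web_ips()))
```

Only minions whose ID matches `web*` contribute an address, so the stats minion is left out of the result.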

Instance labelling

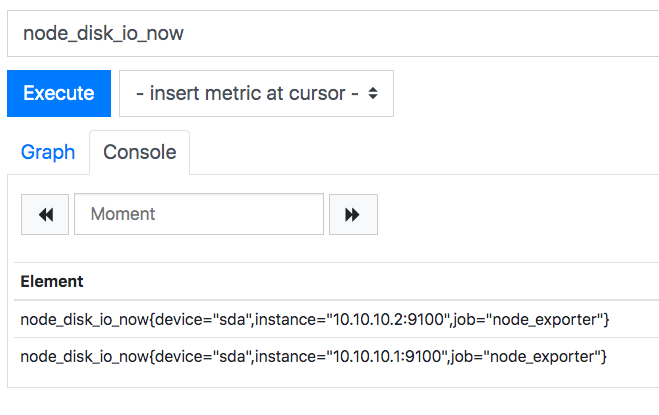

With this configuration, the Prometheus metrics will look something like this:

Human brains aren’t designed to handle IP addresses, so let’s tweak the configuration to set the instance label to the name of the minion instead.

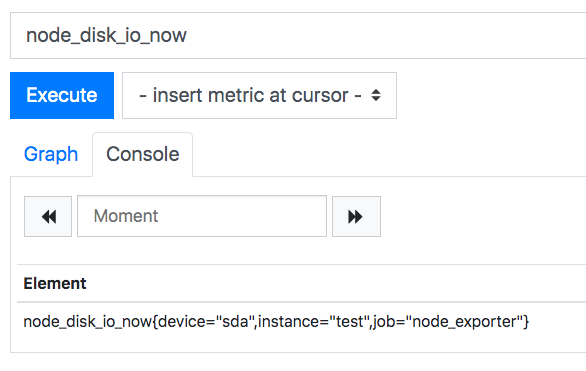

First, override the instance label with a test value:

      static_configs:
        - targets:
          {% for ip in web_ips %}
          - '{{ip}}:9100'
          {% endfor %}
    +     labels:
    +       instance: test

It changed the instance label, but we’ve combined two time series into one!

We need a different label for each instance, something the simple labels: option of static_configs can’t manage.
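The collapse happens because Prometheus identifies a time series by its metric name plus its full set of labels, so two targets with identical labels become indistinguishable. A rough illustration in Python, where `series_key` is a hypothetical helper and not part of any Prometheus client library:

```python
def series_key(metric, labels):
    """Hypothetical helper: a series is identified by metric name + label set."""
    return (metric, frozenset(labels.items()))


targets = ['10.10.10.1:9100', '10.10.10.2:9100']

# Default behaviour: the instance label is the target address, so keys differ.
default_keys = {
    series_key('node_load1', {'job': 'node_exporter', 'instance': t})
    for t in targets
}

# Shared override: every target gets instance="test", so the keys collide.
override_keys = {
    series_key('node_load1', {'job': 'node_exporter', 'instance': 'test'})
    for _ in targets
}

print(len(default_keys), len(override_keys))
```

Two targets yield two distinct keys by default but only one key once they share the same instance value, which is why each target needs its own label.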

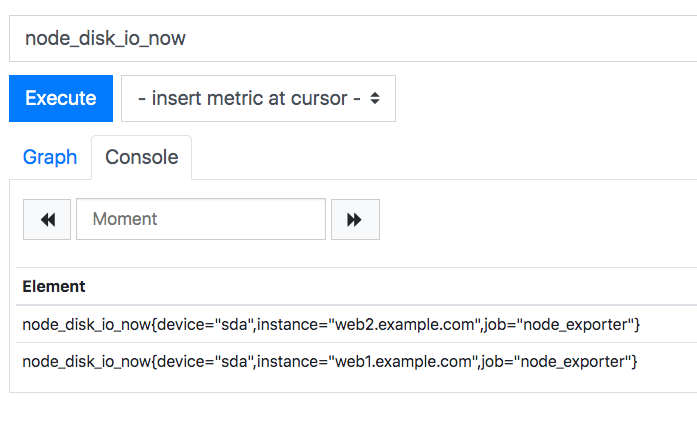

Instead, create a separate static_configs entry for every minion:

      static_configs:
    -   - targets:
    -     {% for ip in web_ips %}
    -     - '{{ip}}:9100'
    -     {% endfor %}
    -     labels:
    -       instance: test
    +   {% for ip in web_ips %}
    +   - targets:
    +     - '{{ip}}:9100'
    +     labels:
    +       instance: '{{ip}}'
    +   {% endfor %}

Then tweak the custom module to return both the minion name and IP address:

      def web_ips():
    -     return __salt__['mine.get']('web*', 'ip_address').values()
    +     return __salt__['mine.get']('web*', 'ip_address')

And loop over both values in the template:

      static_configs:
    -   {% for ip in web_ips %}
    +   {% for minion, ip in web_ips.items() %}
        - targets:
          - '{{ip}}:9100'
          labels:
    -       instance: '{{ip}}'
    +       instance: '{{minion}}'
        {% endfor %}

Much better! Here’s the final Prometheus configuration:

    {% set web_ips = salt['custom.web_ips']() %}
    scrape_configs:
      - job_name: 'node_exporter'
        static_configs:
          {% for minion, ip in web_ips.items() %}
          - targets:
            - '{{ip}}:9100'
            labels:
              instance: '{{minion}}'
          {% endfor %}

Tips and gotchas

- Make sure to update the Prometheus configuration regularly. With Alertmanager active, you’ll receive alerts for minions that have been deliberately destroyed until the Prometheus configuration is updated again.

- Depending on how minions are destroyed, the Salt Mine can sometimes return stale data. This means you may see an IP address of an old minion from custom.web_ips(), which will be added as a failing Prometheus target. See issues #11389 and #21986 for more information and workarounds.

- Adding a Reactor that updates the Prometheus configuration on minion create and destroy events can be a good solution.

Conclusion

With a tiny bit of custom code, we’ve configured Prometheus to scrape a variable number of minions using the Salt Mine. Mining the IPs of other minions works well for other tools too, such as load balancers and firewalls.

In a future post, we’ll use the consul_sd_configs option and Consul to discover scrape targets. We’ll also cover relabel_configs, a much more powerful technique for changing labels to fit our needs.

Need any help getting your Prometheus stack running? Send us an email!
